So, this morning I woke up to sunshine. Folks might think that's normal, but ask a Londoner!

And since a ChatGPT window had been open on my laptop since yesterday, I thought, let's have some fun. Actually, the intention was to find out how long a conversation's context could get and how coherent it would stay.

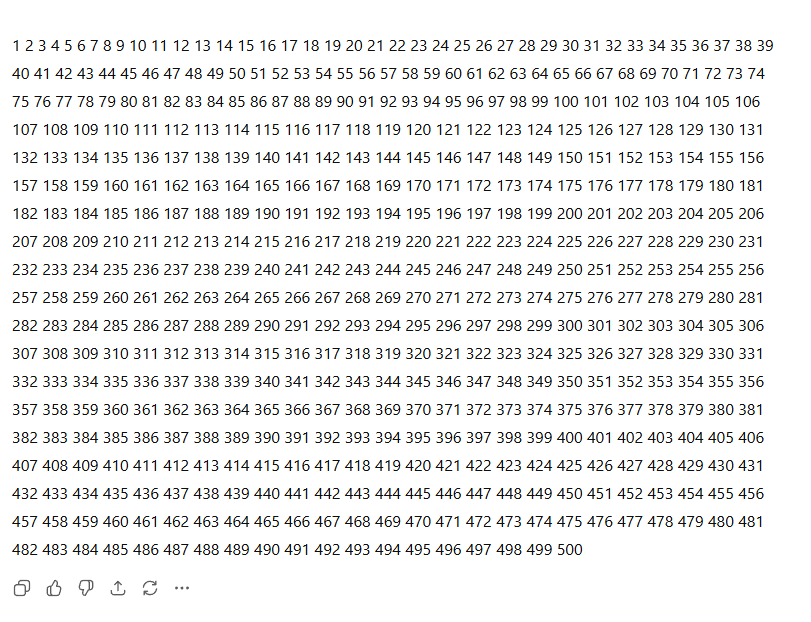

So I asked it to generate a sequence of numbers up to 500, and it handled this request pretty well. For a human, this is where our learning journey begins. Numbers, their written shapes and counting are things we are taught at nursery level. Tackling them doesn't take much expertise for a human brain, so it is not surprising that ChatGPT can also generate this sequence quite easily.

However, the trick comes after this. Once the sequence has been printed, you ask GPT to recite it back. That is where the fun starts. The sequence is quite simple to understand: any child with a basic primary education would see that there are spaces between the individual numbers, and would recite them as discrete numbers.

Here, however, ChatGPT fails miserably. It starts the task easily enough, reciting from one to 10. But once it gets into the mid double digits, it starts to fail catastrophically and hallucinate: numbers get skipped, some come out with odd pronunciations, and all kinds of funny things happen.

The question here is this: we all know that ChatGPT and other LLMs are probabilistic. They generate text one token at a time, picking each next token from a probability distribution learned from a huge amount of text and conditioned on everything that came before. That raises the question: do we base our lives on probabilities, or on deterministic values? My understanding is that we make most of our decisions based on deterministic, factual values, until we reach a point where we don't have all the facts, and then we make our best guess. The core difference, you see, is that for an LLM every decision is a probabilistic one, whereas for a human there is an element of judgment and determinism in it.
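To make that contrast a bit more concrete, here is a toy sketch in Python. `recite_deterministic` reads the printed sequence the way a child would: split on the spaces and treat each piece as one number. `recite_noisy` and `flawless_probability` are hypothetical helpers built on a deliberately simplified assumption: each step is right with a fixed, independent probability, and a slip means skipping a number. That is not how ChatGPT actually works internally; the only point is that a small per-step uncertainty compounds over 500 steps.

```python
import random


def recite_deterministic(text: str) -> list[int]:
    """Read the printed sequence like a child would: one number per space-separated token."""
    return [int(token) for token in text.split()]


def recite_noisy(n: int, p_correct: float, seed: int = 0) -> list[int]:
    """Toy model (an assumption, not ChatGPT's real mechanism): at each step the
    'model' says the right next number with probability p_correct, otherwise it
    slips and skips one number ahead."""
    rng = random.Random(seed)
    spoken: list[int] = []
    previous = 0
    for _ in range(n):
        if rng.random() < p_correct:
            nxt = previous + 1  # the likely continuation
        else:
            nxt = previous + 2  # a slip: one number is skipped
        spoken.append(nxt)
        previous = nxt
    return spoken


def count_skips(spoken: list[int]) -> int:
    """Count steps where the spoken number is not exactly one more than the last."""
    return sum(1 for a, b in zip([0] + spoken, spoken) if b - a != 1)


def flawless_probability(p_correct: float, length: int) -> float:
    """Chance that every one of `length` independent steps is correct."""
    return p_correct ** length


if __name__ == "__main__":
    printed = " ".join(str(i) for i in range(1, 501))
    assert recite_deterministic(printed) == list(range(1, 501))

    # Even at 99% per-step accuracy, a flawless run of 500 is very unlikely.
    print(round(flawless_probability(0.99, 500), 4))  # 0.0066
    print(count_skips(recite_noisy(500, p_correct=0.99)))
```

The deterministic reader never skips, however long the sequence is. In the toy model, by contrast, the odds of a perfect 500-number recital at 99% per-step accuracy are well under 1%, which is one simple way to picture why a long recital can go wrong even when each individual step looks easy.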

So my question to the wider audience is: would you let ChatGPT decide things on your behalf, especially if it isn't going to come back and ask you clarifying questions?
